In the last few years, ML-based applications have made an impact everywhere, from fraud detection in financial institutions and product recommendations on e-commerce websites to preventive maintenance in manufacturing and supply chains and self-driving capabilities in cars. The availability of vast data sets for training machine learning (ML) models, on-demand computing power, and increased collaboration between data scientists and developers have made these applications ubiquitous. Organizations have also realized that the processes driving the implementation of machine learning in business need to be production-oriented rather than purely research-oriented. The need for higher efficiency, stability, and end-to-end visibility and control has led to increased interest in Machine Learning Operations, or MLOps.

What is MLOps?

MLOps refers to practices that help in the efficient development, testing, deployment, and maintenance of ML-based applications. In theory, MLOps is similar to DevOps, which brings development and operations teams together, but MLOps also brings data scientists and ML engineers into the mix. Data scientists collect the right data sets, and ML engineers train the ML models on these data sets. Just like DevOps, MLOps practices emphasize automation and monitoring of the entire ML lifecycle, which in turn can lead to better collaboration, faster time to market, and increased reliability and stability.

MLOps vs. DevOps

At the end of the day, MLOps and DevOps are both software development practices. However, there are some major differences, largely because ML systems differ markedly from traditional software systems in both their development and their behavior.

| | DevOps | MLOps |
| --- | --- | --- |
| Skills/roles | BA, developers/testers, site reliability engineers, QA, UX, release managers, etc. | Data scientists, ML engineers + DevOps roles |
| Development | Predictable – software systems are expected to behave in a deterministic manner based on a given set of conditions and input variables. The source code evolves over a period to improve performance, fix bugs, and introduce new features. | Experimental – requires testing of different models and algorithms, identifying what works best, and reusing the same techniques for similar outcomes in an automated manner. In addition to the source code, MLOps requires saving of data and model versions that can be reused and retrained. |
| Testing | Unit tests, integration tests, functional tests, acceptance tests, etc. | Testing also requires data validation, model validation, model quality evaluation |
| Deployment | Features are continuously developed and tested in different environments, released to production, and rolled back and released again after modifications in an automated manner. | Data scientists and ML engineers perform different manual steps to train and validate new models. It’s usually complex to automate these steps to retrain and deploy models in the production environment (also known as continuous training or CT). |
| Production | The software is usually impacted by known coding errors, vulnerabilities, or performance issues, which are resolved as the bugs are detected. | In addition to the usual factors that affect the software, ML systems’ performance also hinges on the data; changes in data profile can lead to unexpected performance issues. |
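
To make the "changes in data profile" point from the Production row concrete, here is a minimal sketch of one possible check. It assumes a single numeric feature and measures how far the mean of live data has moved from the training mean, in units of the training standard deviation. The function names and the threshold of 2.0 are hypothetical choices for illustration, not part of any specific MLOps tool.

```python
from statistics import mean, stdev


def profile_shift(train_values, live_values):
    """Distance between live and training means, in training standard deviations.

    Illustrative only: real systems usually compare full distributions,
    not just means.
    """
    baseline_mean = mean(train_values)
    baseline_std = stdev(train_values)  # sample standard deviation
    live_mean = mean(live_values)
    if baseline_std == 0:
        # A constant training feature: any change in the mean counts as a shift.
        return 0.0 if live_mean == baseline_mean else float("inf")
    return abs(live_mean - baseline_mean) / baseline_std


def has_drifted(train_values, live_values, threshold=2.0):
    """Flag the feature when the shift exceeds a (hypothetical) threshold."""
    return profile_shift(train_values, live_values) > threshold


# Example usage with made-up numbers.
training_sample = [10, 12, 11, 13, 9]
print(has_drifted(training_sample, [11, 12, 10]))  # live data close to training data
print(has_drifted(training_sample, [20, 21, 19]))  # live data far from training data
```

A check like this would typically run on a schedule against recent production inputs, so that a shift in the data is noticed before it shows up as a drop in model quality.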

Benefits of MLOps

With MLOps, organizations can get the following benefits:

- Reduced Technical Debt: In a paper, Google argues that ML systems are more susceptible to incurring technical debt because, in addition to the traditional challenges of software, these systems also have ML-specific issues. MLOps provides a standard approach to reduce ML-specific technical debt at the system level.

- Higher Productivity: Earlier, ML engineers or data scientists used to deploy ML models in production. With MLOps, they can rely on the automation experience of DevOps professionals, while they focus on their core tasks. This approach significantly reduces the burden on the team and increases productivity.

- Reduced Time to Market: By automating the model training and retraining processes, MLOps effectively implements CI/CD practices for deploying and updating machine learning pipelines. This shortens the release cycles of ML-based products.

- Accuracy: With automated data and model validation, real-time evaluation of performance in production, and continuous training against fresh data sets, ML models can improve significantly over time.

Are You Ready for MLOps?

If you are assessing your organization’s readiness for MLOps and are unsure about the different steps in the process, Google’s guide on the automation of ML pipelines can provide a head start. It describes three approaches to MLOps, depending on your organization’s maturity and its ML requirements.

- MLOps Level 0: This approach is recommended for organizations that have in-house data scientists who can rely on manual ML workflows. If your organization needs to update ML models infrequently (less than once a year), you can explore this approach.

- MLOps Level 1: This approach requires a pipeline for continuous training. The pipeline automatically validates data and models and triggers retraining whenever performance degrades or new data sets become available. It can be useful for businesses that need to update models based on frequently changing market data.

- MLOps Level 2: This approach goes one step further, automating both the ML training pipelines and the deployment of ML models to production. It is recommended for tech-driven organizations that need to update their models on an hourly basis and deploy across multiple servers simultaneously.
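
The Level 1 retraining trigger described above can be sketched as a small decision function. This is only an illustration under stated assumptions: the `PipelineStatus` fields, the accuracy metric, and both thresholds are hypothetical and are not taken from Google’s guide.

```python
from dataclasses import dataclass


@dataclass
class PipelineStatus:
    """Hypothetical snapshot of what a continuous-training pipeline monitors."""
    baseline_accuracy: float       # accuracy measured when the model was deployed
    current_accuracy: float        # accuracy measured on recent production data
    new_rows_since_training: int   # amount of fresh labeled data available


def should_retrain(status, max_accuracy_drop=0.05, min_new_rows=10_000):
    """Return True when either trigger fires: degradation or enough new data."""
    degraded = (status.baseline_accuracy - status.current_accuracy) > max_accuracy_drop
    enough_new_data = status.new_rows_since_training >= min_new_rows
    return degraded or enough_new_data


# Example usage with made-up numbers.
healthy = PipelineStatus(baseline_accuracy=0.90, current_accuracy=0.89, new_rows_since_training=500)
degraded = PipelineStatus(baseline_accuracy=0.90, current_accuracy=0.80, new_rows_since_training=500)
print(should_retrain(healthy))
print(should_retrain(degraded))
```

At Level 0 a person would make this decision by hand. At Level 1 the pipeline would evaluate a rule like this automatically, and at Level 2 the deployment of the retrained model would be automated as well.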

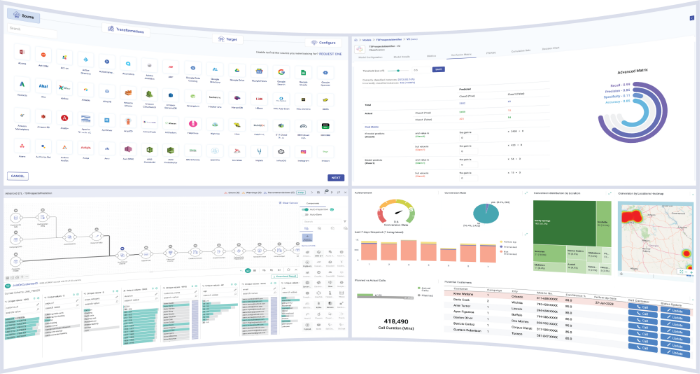

Gathr helps software teams enhance automation and monitoring across their CI/CD pipelines with several out-of-the-box and custom apps.
