According to Gartner¹, through 2024, 50% of organizations will adopt modern data quality solutions to better support their digital business initiatives. As enterprises modernize their data management infrastructure, data integration remains a key focus area. The data integration process brings together data from multiple systems, consolidates it, and delivers it to a modern data warehouse or data lake for analytical use cases. While there is no single approach to data integration, typical steps include data ingestion, preparation, and ETL (extract, transform, load). This post outlines some of the most common data integration pitfalls and discusses strategies to avoid them.

#1 Inadequate data quality checks

With massive volumes of structured and unstructured data being generated from databases, CRM platforms, and applications every day, it is vital to properly qualify data as part of the integration process. Many source and legacy data systems provide “unclean data” containing corrupted, incorrect, and irrelevant records. These records need to be identified, standardized, modified, or deleted depending on business needs. Data teams should perform thorough quality checks throughout the ETL lifecycle, reconcile source-to-target data loads and use a logging methodology that accurately identifies errors and tracks quality concerns. Without thorough cleansing and profiling, data integration remains subject to the adage “garbage in, garbage out”.
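
As a rough illustration of the checks described above, the sketch below validates records, logs each rejection, and reconciles source and target counts. The field names (`id`, `email`), the validation rules, and the helper functions are hypothetical; real rules depend on the business requirements.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl.quality")

REQUIRED_FIELDS = ("id", "email")  # hypothetical schema, for illustration only


def validate(record):
    """Return a list of quality problems found in one record."""
    problems = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if value is None or str(value).strip() == "":
            problems.append(f"missing {field}")
    email = record.get("email")
    if email and "@" not in str(email):
        problems.append("malformed email")
    return problems


def clean(records):
    """Split records into accepted and rejected, logging each rejection."""
    accepted, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            log.warning("rejected record %r: %s", record.get("id"), ", ".join(problems))
            rejected.append(record)
        else:
            # Standardize: trim whitespace and lower-case the email.
            email = str(record["email"]).strip().lower()
            accepted.append({**record, "email": email})
    return accepted, rejected


def reconcile(source_count, loaded_count, rejected_count):
    """Source-to-target check: every source row is either loaded or rejected."""
    return source_count == loaded_count + rejected_count


source = [
    {"id": 1, "email": " Ada@Example.com "},
    {"id": 2, "email": "not-an-email"},
    {"id": 3, "email": ""},
]
accepted, rejected = clean(source)
log.info("reconciled: %s", reconcile(len(source), len(accepted), len(rejected)))
```

Keeping rejected records, rather than silently dropping them, is what makes the reconciliation step possible: the counts only balance if every source row is accounted for.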

#2 Building for short-term goals

Data integration enables multiple users and teams across the enterprise to access and understand the information they need to make effective business decisions. It is, therefore, important to build a sustainable, scalable data integration solution that can handle changing data velocities and volumes. A powerful ETL solution not only factors in current requirements, but also makes it easy to add new data formats and layouts that may emerge in the future. Another key factor is long-term cost efficiency: enterprises should avoid designing a system that becomes expensive to maintain in the long run. To understand long-term technology and business goals, interview all major stakeholders across the enterprise before investing in a data integration tool or building a home-grown solution. Additionally, an open, interoperable solution architecture can help businesses adapt to ever-changing technologies and avoid disruption in the future.
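
One way to leave room for future formats is to keep format handling behind a small registry, so that supporting a new layout means adding a parser rather than changing the pipeline. The sketch below is a minimal illustration under that assumption; the `parser` decorator, the `PARSERS` registry, and `ingest` are hypothetical names, not part of any particular tool.

```python
import csv
import io
import json

PARSERS = {}  # maps a format name to the function that parses it


def parser(fmt):
    """Decorator that registers a parsing function for one format."""
    def register(func):
        PARSERS[fmt] = func
        return func
    return register


@parser("csv")
def parse_csv(text):
    return list(csv.DictReader(io.StringIO(text)))


@parser("json")
def parse_json(text):
    return json.loads(text)


def ingest(fmt, text):
    """Parse text in the given format; the pipeline never names a format itself."""
    func = PARSERS.get(fmt)
    if func is None:
        raise ValueError(f"no parser registered for format {fmt!r}")
    return func(text)


# Supporting a new format later only requires registering one more parser.
@parser("jsonl")
def parse_jsonl(text):
    return [json.loads(line) for line in text.splitlines() if line.strip()]


rows = ingest("csv", "id,name\n1,Ada\n")
events = ingest("jsonl", '{"id": 1}\n{"id": 2}\n')
```

The same idea extends to sources and targets: the less the core pipeline knows about specific formats, the cheaper it is to maintain as requirements change.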

#3 Lack of real-time capabilities

Many enterprise use cases require real-time or near-real-time data collection. Batch-based data integration, however, works only when users can wait to receive and analyze the data. For businesses with time-sensitive operations, it’s important to invest in tools with automated, real-time data integration capabilities. Such tools transform and correlate streaming data and make it consumable the moment it’s written to the target platform, which saves analysts valuable time and effort in harnessing the data.

#4 Underestimating changing data velocities

In the digital era, data integration is never a one-time process – it is ongoing. Enterprises must therefore have frameworks in place to efficiently acquire, transform, and move increasingly fast data. Waiting for data to be loaded into a legacy reporting tool is no longer an option. A robust data integration solution should support changing velocities for batch and streaming datasets of all sizes. It should also be event-driven rather than clock-driven, triggering integration when something happens instead of on a fixed schedule. This helps businesses respond to events in real time and improve the customer experience.
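
To make the contrast with clock-driven loading concrete, here is a minimal sketch of an event-driven consumer using only the Python standard library. The event shapes and the `transform` function are made up for illustration; a production system would typically sit on a message broker or streaming platform rather than an in-process queue.

```python
import queue
import threading

events = queue.Queue()
loaded = []      # stands in for the target platform
STOP = object()  # sentinel that tells the worker to shut down


def transform(event):
    """Hypothetical transform: normalize the event type and keep the payload."""
    return {"type": event["type"].lower(), "payload": event["payload"]}


def worker():
    # Event-driven: get() blocks until an event arrives, so there is no
    # polling interval and no scheduled batch window.
    while True:
        event = events.get()
        if event is STOP:
            break
        loaded.append(transform(event))


thread = threading.Thread(target=worker)
thread.start()

# Producers publish events whenever they occur.
events.put({"type": "ORDER_PLACED", "payload": {"order_id": 42}})
events.put({"type": "ORDER_SHIPPED", "payload": {"order_id": 42}})
events.put(STOP)
thread.join()
```

A clock-driven job would instead wake up on a timer and process whatever had accumulated, so the delay before data is usable depends on the schedule rather than on when the event happened.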

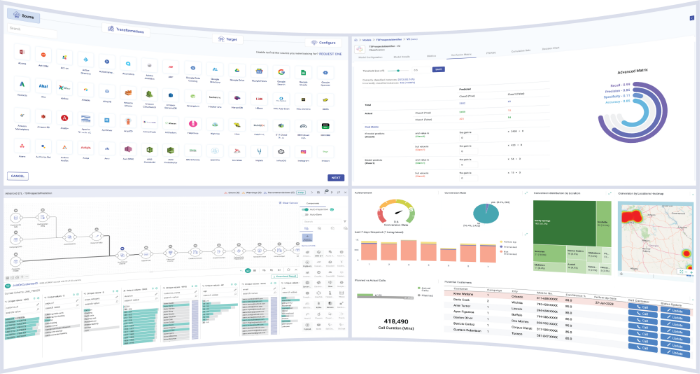

Data integration is a major step towards realizing a 360-degree view of the customer and transforming this information into valuable insights. Yet most data integration projects are extremely complex to plan and execute. We recommend choosing a modern, cloud-native data integration tool that is easy to use, provides a variety of pre-built connectors, and offers a no-code visual interface to build data pipelines. Gathr, as the world’s first and only data-to-outcome platform, offers all these features and more. To get a first-hand experience, start your free 14-day trial today.
