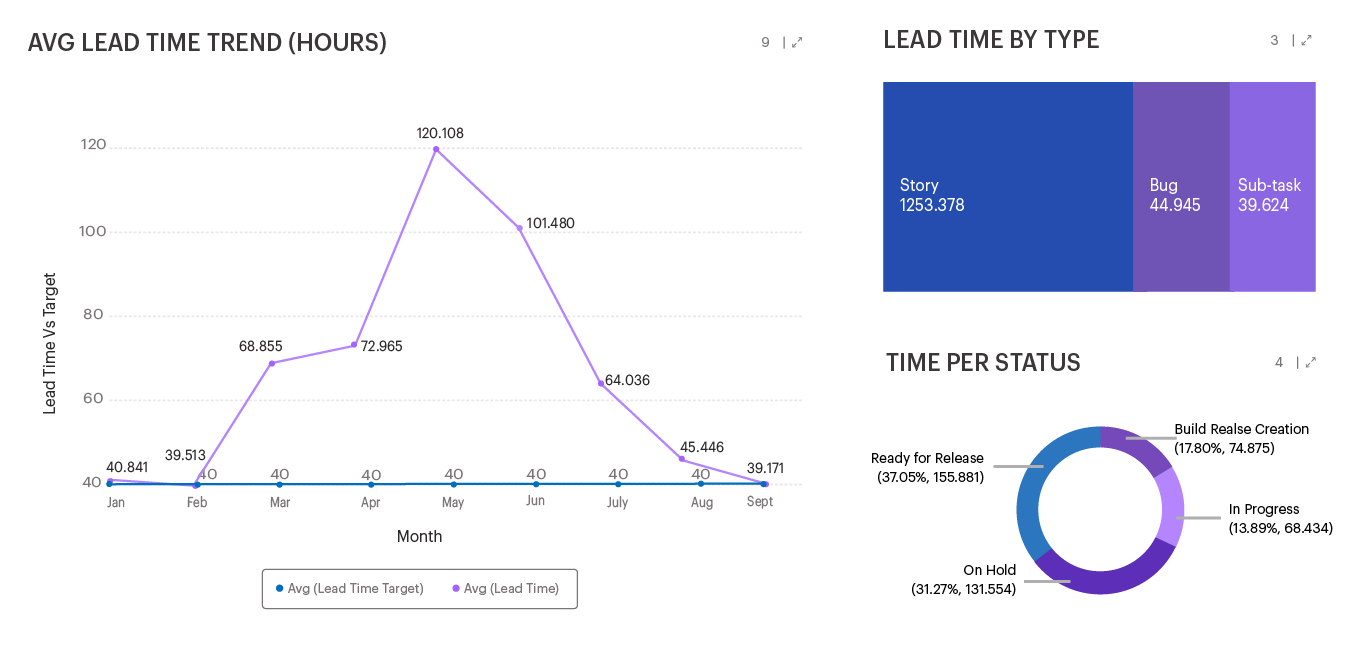

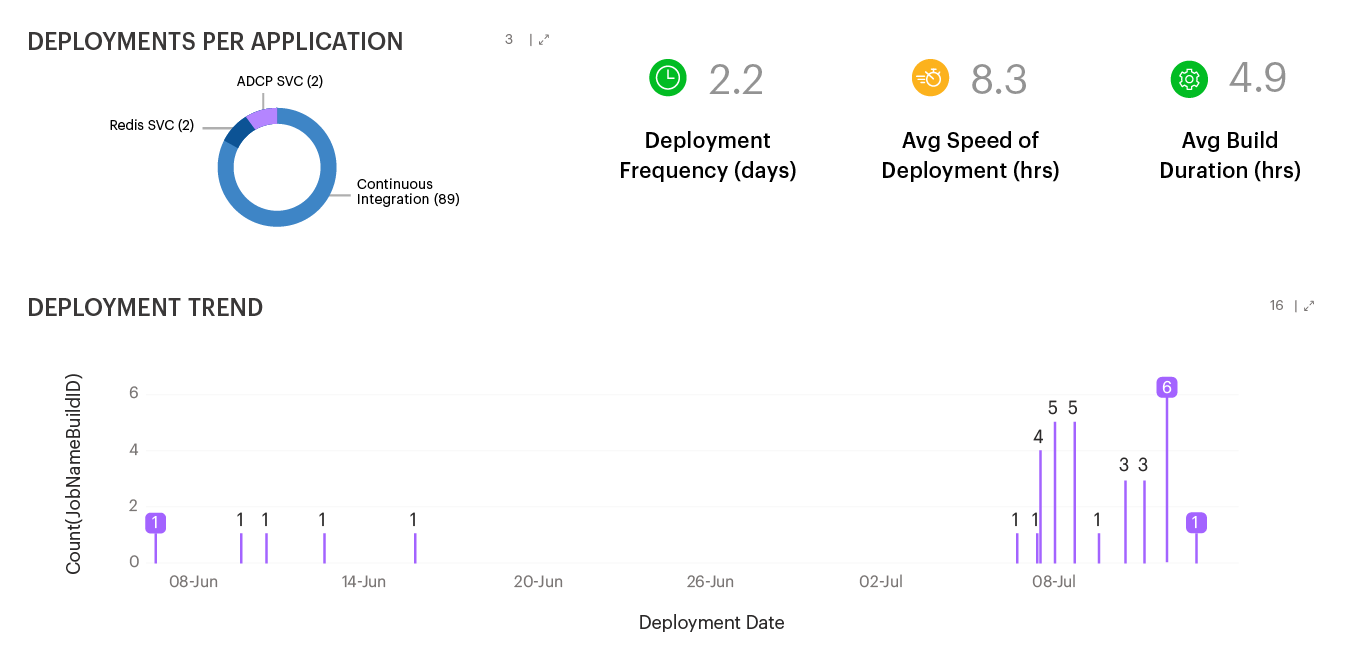

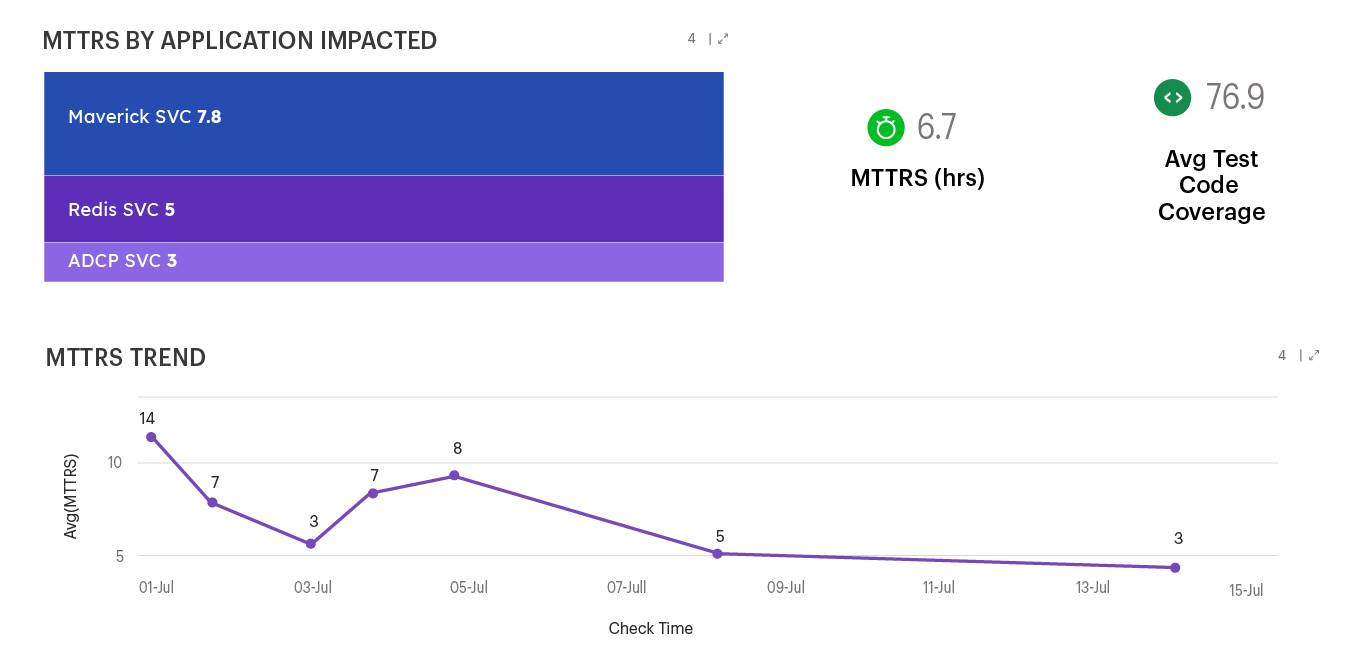

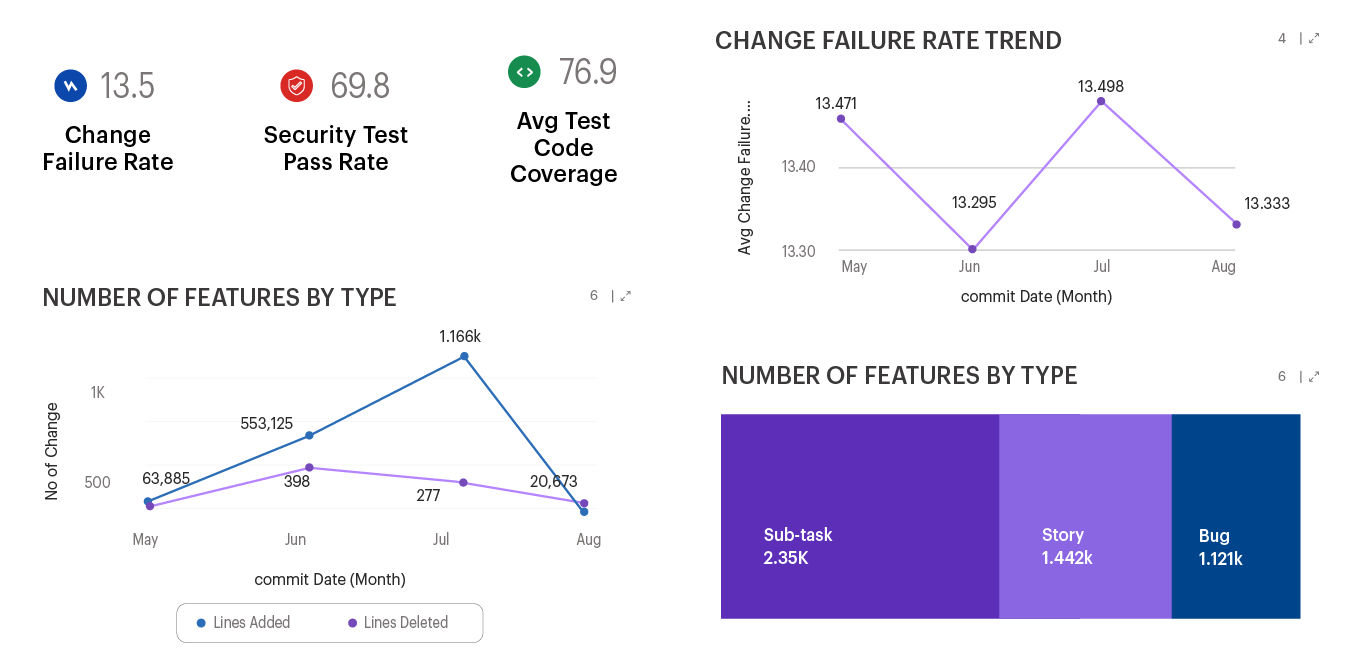

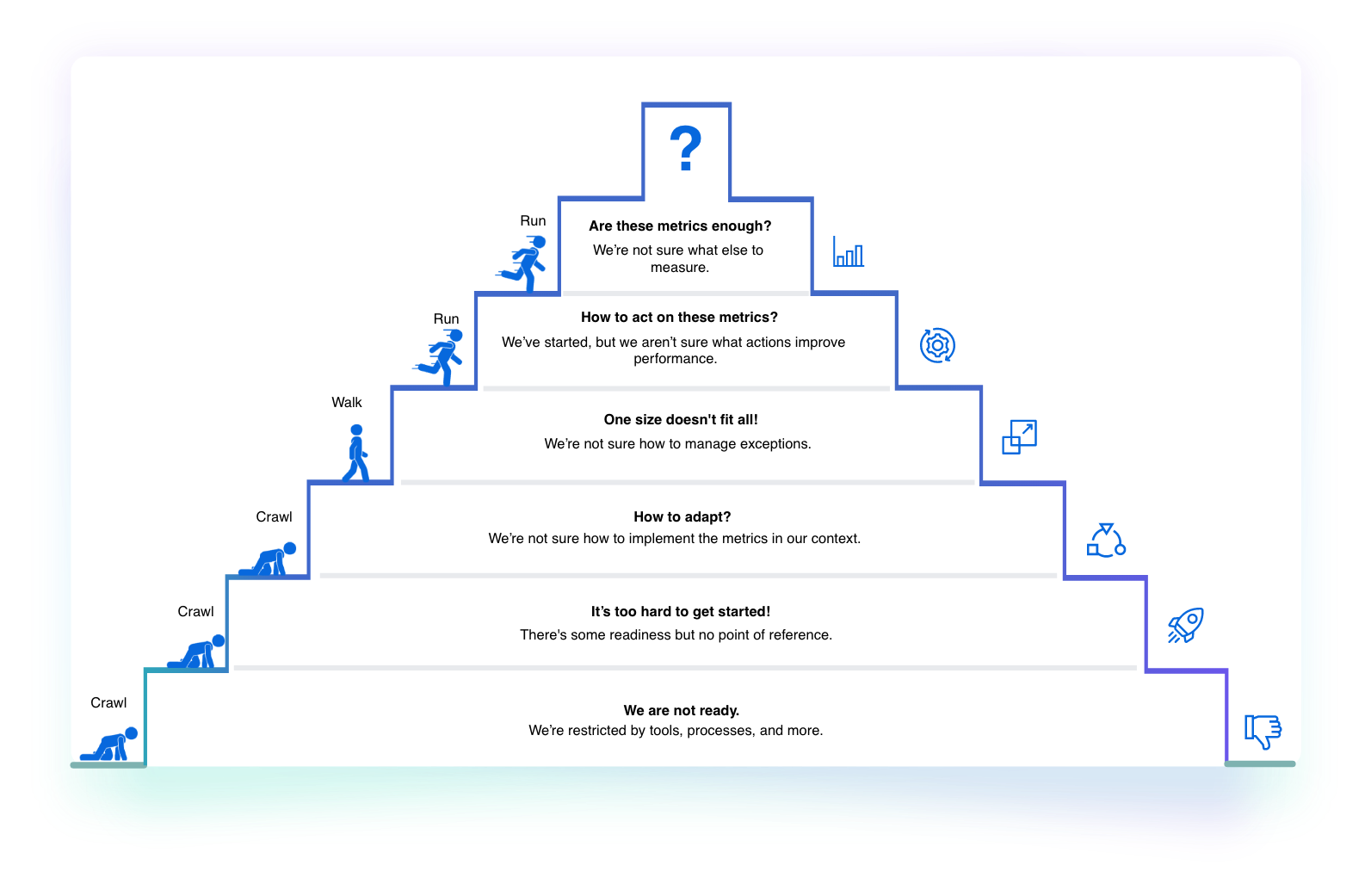

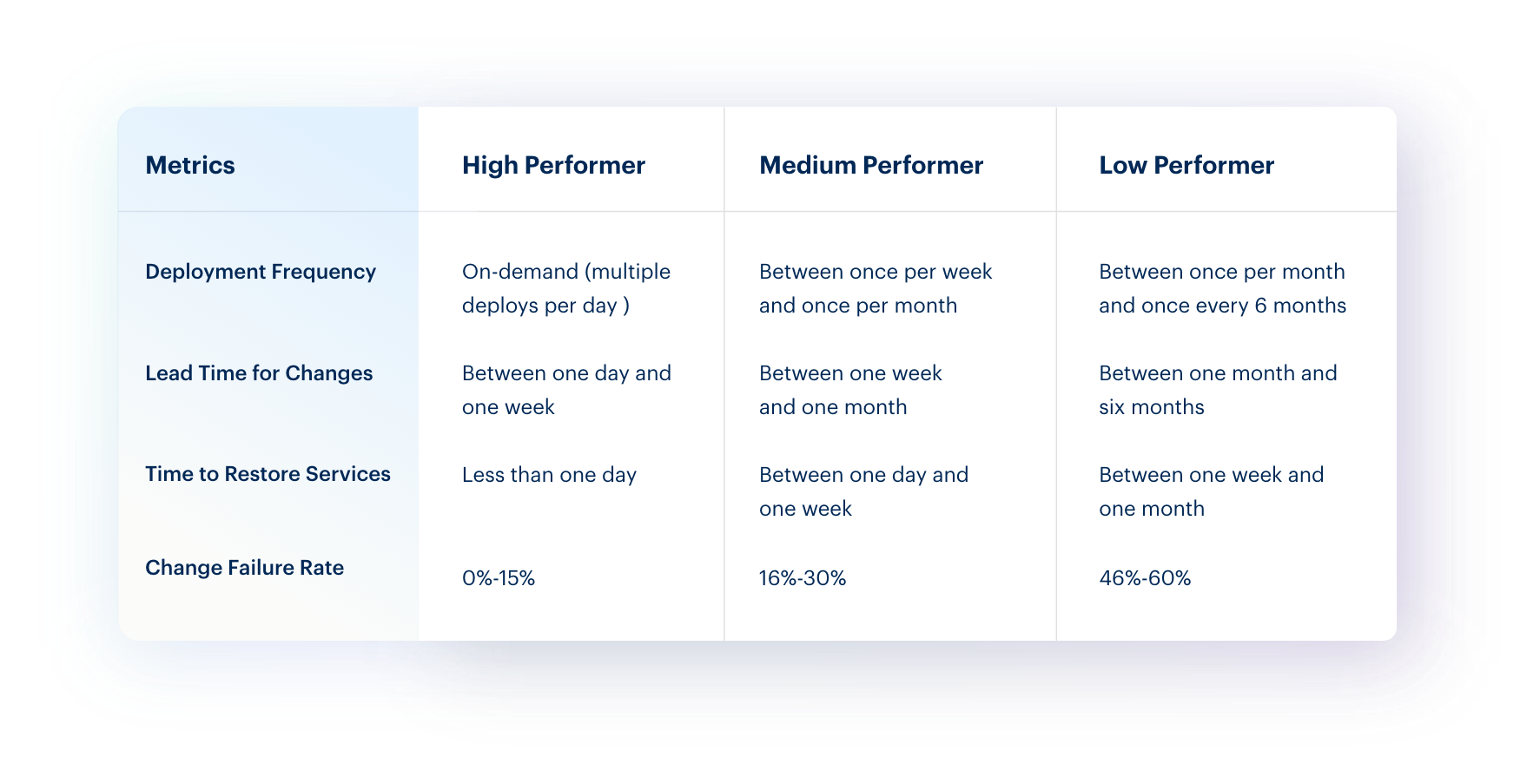

Most organizations have embraced DevOps by developing the right culture, establishing communication channels, and implementing advanced tools, yet many still cannot assess whether their DevOps initiatives are succeeding. DevOps and engineering leaders recognize DORA metrics as a standard framework for measuring that success. The metrics cover software delivery throughput (speed) and reliability (quality). By analyzing the DORA KPIs, teams can gain insight into performance trends, detect issues across different stages of DevOps, and take remedial action to deliver better software, faster.

For instance, by tracking deployment frequency, organizations can see whether their teams have improved over a period, remained consistent, or experienced extreme deviations. Similarly, a higher change failure rate can indicate issues with change management, quality testing, and more. With a DORA metrics dashboard, organizations can visualize the metrics, detect process bottlenecks, perform root cause analysis, and take action for continuous improvement in DevOps.
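To make the two metrics named above concrete, here is a minimal Python sketch that computes deployment frequency and change failure rate from a deployment log. The `Deployment` record, its fields, and the sample data are hypothetical, invented for illustration. The definitions used (deployments per day, and failed deployments divided by total deployments) are common simplifications; a real dashboard would pull these records from a CI/CD or incident system and may define the metrics differently.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    day: date
    failed: bool  # assumed flag: True if the change needed remediation


def deployment_frequency(deployments, start, end):
    """Average deployments per day over the inclusive window [start, end]."""
    days = (end - start).days + 1
    if days <= 0:
        raise ValueError("end must not be earlier than start")
    count = sum(1 for d in deployments if start <= d.day <= end)
    return count / days


def change_failure_rate(deployments):
    """Share of deployments flagged as failed (0.0 when there are none)."""
    if not deployments:
        return 0.0
    return sum(1 for d in deployments if d.failed) / len(deployments)


# Illustrative sample data, not real measurements.
sample = [
    Deployment(date(2024, 1, 1), failed=False),
    Deployment(date(2024, 1, 2), failed=True),
    Deployment(date(2024, 1, 4), failed=False),
    Deployment(date(2024, 1, 7), failed=False),
]

frequency = deployment_frequency(sample, date(2024, 1, 1), date(2024, 1, 7))
failure_rate = change_failure_rate(sample)
print(f"Deployments per day: {frequency:.2f}")
print(f"Change failure rate: {failure_rate:.0%}")
```

Running the same calculation over successive windows (for example, week by week) gives the kind of trend a dashboard would plot, showing whether a team is improving, holding steady, or deviating sharply.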