WEBINAR

Despite investments in big data lakes, there is widespread use of expensive proprietary products for data ingestion, integration, and transformation (ETL) while bringing and processing data on the lake.

However, enterprises have successfully tested Apache Spark for its versatility and strengths as a distributed computing framework that can handle end-to-end needs for data processing, analytics, and machine learning workloads.

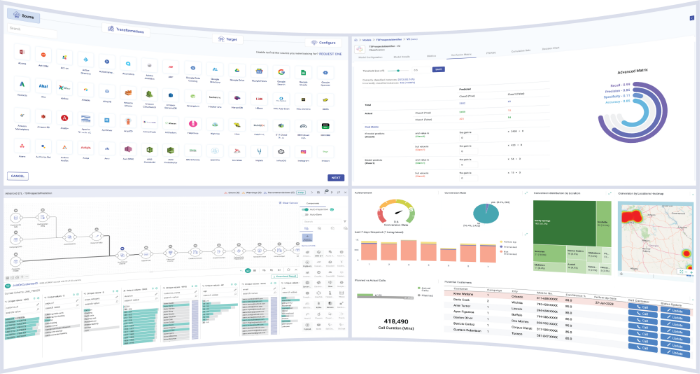

In this webinar, we will discuss why Apache Spark is a one stop shop for all data processing needs. We will also demo how a visual framework on top of Apache Spark makes it much more viable.

The following scenarios will be covered:

On-Prem

-

Data quality and ETL with Apache Spark using pre-built operators

-

Advanced monitoring of Spark pipelines

On Cloud

-

Visual interactive development of Apache Spark Structured Streaming pipelines

-

IoT use case with event-time, late-arrival and watermarks

-

Python based predictive analytics running on Spark